Enterprises are increasingly recognizing the potential of generative AI to drive innovation and increase productivity. However, the risks associated with exposing sensitive data to publicly hosted large language models (LLMs) have become a major concern in terms of security, privacy, and governance. To mitigate these risks, businesses should consider working within their existing security and governance boundaries, hosting and deploying LLMs within their protected environment. Additionally, building domain-specific LLMs that are trained on internal data can help avoid privacy issues and inaccuracies. Lastly, leveraging unstructured data and utilizing multimodal AI models can further enhance insights and surface valuable information. By approaching generative AI deliberately and cautiously, businesses can strike the right balance between risk and reward, reaping the benefits of this transformative technology.

Work within your existing security and governance perimeter

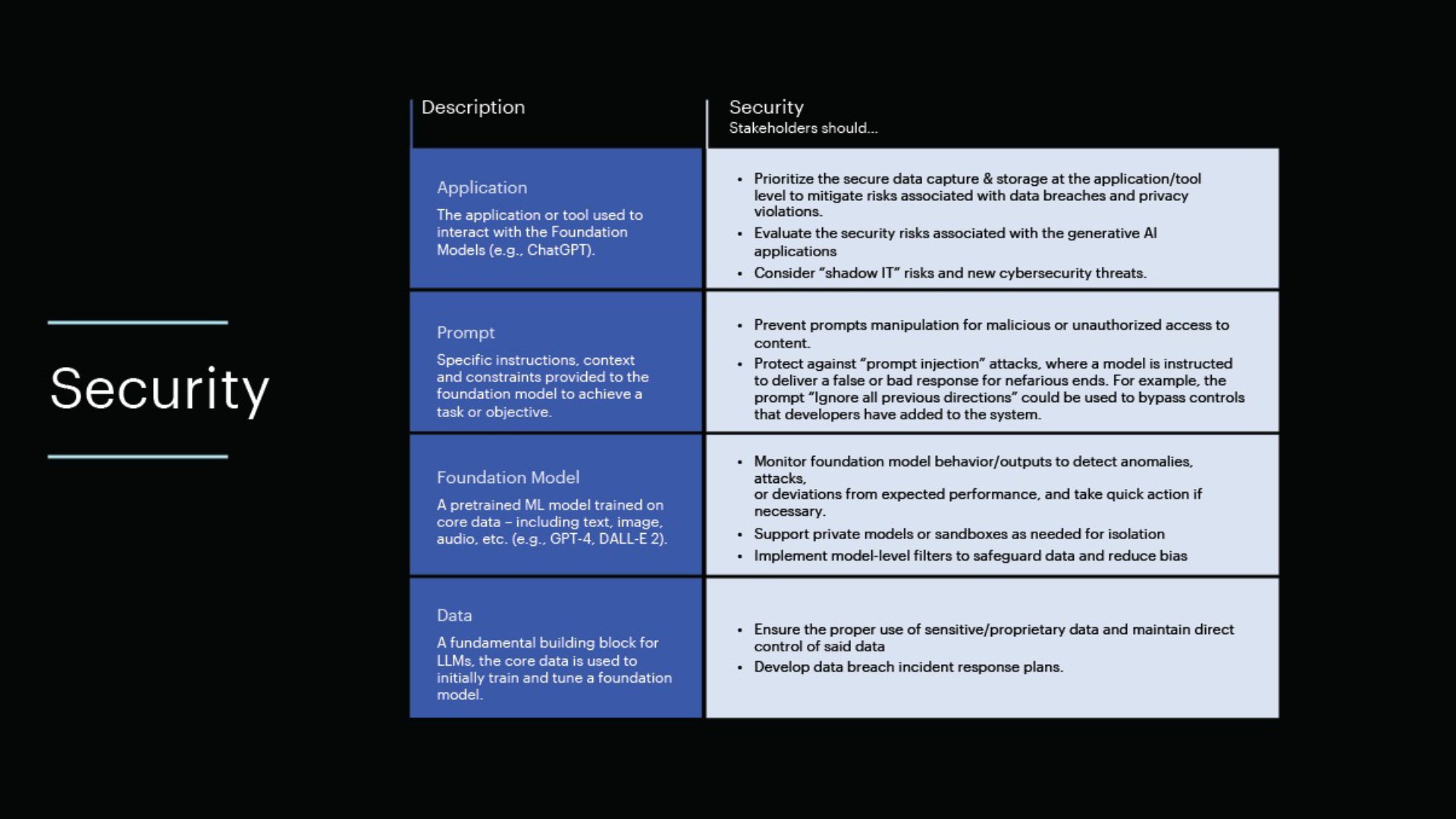

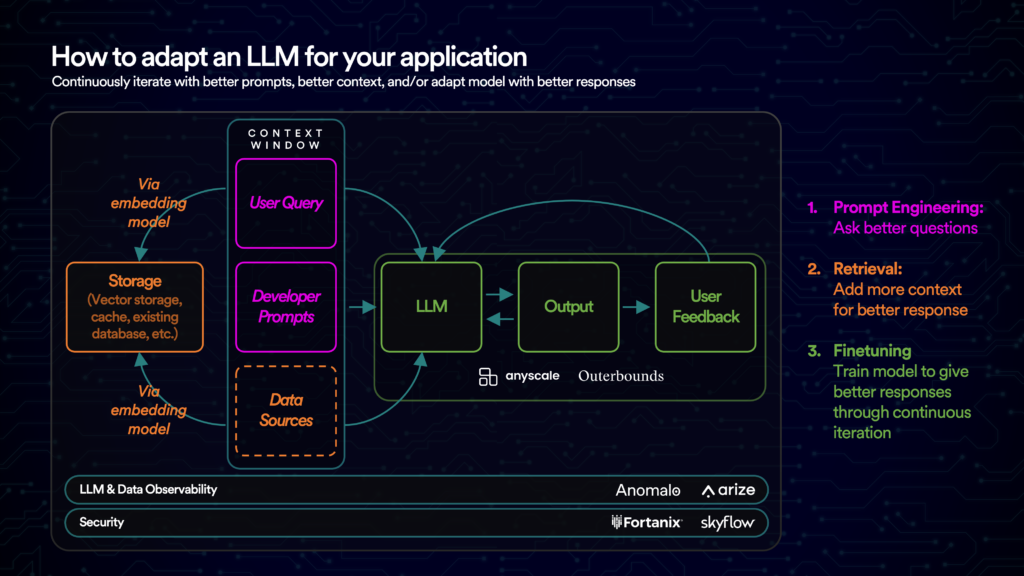

When you bring generative AI and large language models (LLMs) into the enterprise, work within your existing security and governance perimeter. Rather than sending your data out to an LLM, bring the LLM to your data. This approach lets you balance the need for innovation against the need to keep customers’ personally identifiable information (PII) and other sensitive data secure.

Most large businesses already have a strong security and governance boundary around their data. By hosting and deploying LLMs within this protected environment, you can maintain control over your data and mitigate the risks associated with exposing sensitive information. This also enables data teams to further develop and customize the LLM according to your organization’s specific needs, all while operating within the existing security perimeter.

To implement this strategy, it is crucial to have a strong data strategy in place. This involves eliminating data silos and establishing simple, consistent policies that allow teams to access the data they need within a secure and governed environment. The ultimate goal is to have actionable and trustworthy data that can be easily accessed and used with an LLM.

Build domain-specific LLMs

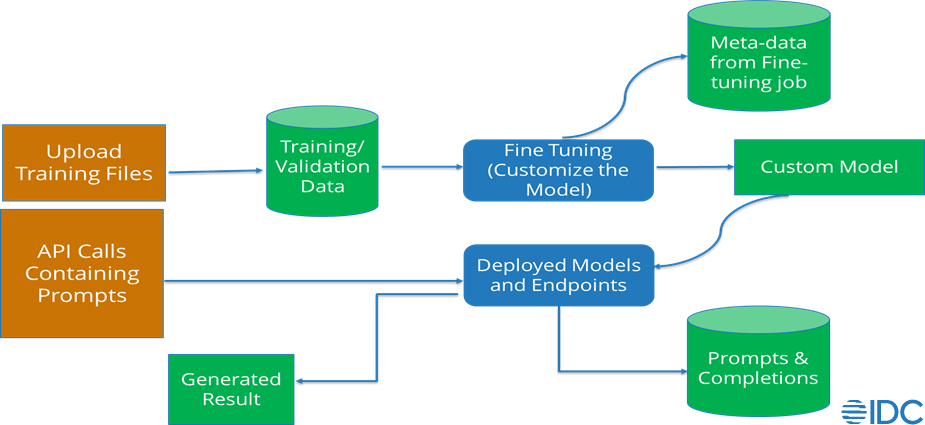

Large language models trained on the entire web may pose privacy challenges and reproduce biases that create risks for businesses. To mitigate these risks, it is recommended to build domain-specific LLMs tailored to your organization’s unique needs. While hosted models like ChatGPT have gained attention, there is a growing list of LLMs that can be downloaded, customized, and used behind the organization’s firewall.

By extending and customizing a model, you can make it more intelligent about your own business, customers, and industry, so that its responses are accurate and relevant to your organization. Training a foundation model on web-scale data requires vast amounts of data and computing power, but fine-tuning an existing model for a particular content domain requires much less data.

Customizing LLMs using internal data that you know and trust allows you to obtain higher-quality results that are tailored to your organization’s specific use cases. Smaller models targeting specific use cases tend to require less compute power and smaller memory sizes, making them more cost-effective and efficient to run.
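To make the memory point concrete, here is a rough back-of-the-envelope sketch. It assumes that the memory needed just to hold a model's weights is roughly the parameter count multiplied by the bytes used per parameter (2 bytes at 16-bit precision). The model sizes are hypothetical and chosen only for illustration, and the estimate ignores activations, caches, and other runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Hypothetical model sizes, used only to compare orders of magnitude.
for name, params in [("7B-parameter model", 7e9), ("70B-parameter model", 70e9)]:
    gb = weight_memory_gb(params)
    print(f"{name}: ~{gb:.0f} GB of weights at 16-bit precision")
```

Under these assumptions, the smaller model needs about a tenth of the memory for its weights, which is one reason a model sized for a specific use case can be cheaper to host.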

Surface unstructured data for multimodal AI

By commonly cited estimates, about 80% of data is unstructured: emails, images, contracts, training videos, and the like. To use this data and train multimodal AI models, you need technologies such as natural language processing (NLP) to extract information from unstructured sources. With that information extracted, data scientists can build and train AI models that identify relationships between different types of data and surface valuable insights for your business.

Tuning a model on your internal systems and data requires access to all the relevant information stored in different formats. NLP techniques enable the extraction of valuable information from unstructured data sources, facilitating the development of more powerful and comprehensive AI models.
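As a small illustration of turning unstructured text into structured fields, the sketch below parses a made-up email with Python's standard `email` module and pulls out a few values with regular expressions. Simple pattern matching stands in here for a full NLP pipeline, and the sample message, the `C-1234` contract ID format, and the `extract_fields` helper are all assumptions made for the example.

```python
import re
from email import message_from_string

# A made-up message standing in for an unstructured source.
RAW_EMAIL = """\
From: alice@example.com
To: support@example.com
Subject: Renewal of contract C-1042

Hello, please confirm that contract C-1042 renews on 2024-03-01.
The related order is C-2210.
"""

CONTRACT_ID = re.compile(r"\bC-\d{4}\b")         # hypothetical ID format
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # dates written as YYYY-MM-DD


def extract_fields(raw: str) -> dict:
    """Turn one raw email into a small structured record."""
    msg = message_from_string(raw)
    body = msg.get_payload()  # plain string for a non-multipart message
    text = f"{msg['Subject']}\n{body}"
    return {
        "sender": msg["From"],
        "subject": msg["Subject"],
        "contract_ids": sorted(set(CONTRACT_ID.findall(text))),
        "dates": ISO_DATE.findall(body),
    }


fields = extract_fields(RAW_EMAIL)
print(fields)
```

Records like this can then be joined with structured data, which is the kind of cross-format relationship described above.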

Proceed deliberately but cautiously

Generative AI is evolving rapidly, so proceed deliberately but cautiously. Read the fine print and terms of service for any model or service a vendor provides. Working with reputable vendors that offer explicit guarantees about their models helps mitigate risk and supports the reliability and security of the AI technology you deploy.

While caution is necessary, companies cannot afford to stand still in the face of technological advancements. Every business should explore how AI can disrupt and transform its industry. By bringing generative AI models close to your data and operating within your existing security perimeter, you can find the right balance between risk and reward and take advantage of the opportunities that AI technology brings.

Eliminating silos and establishing simple, consistent policies

To work effectively within your existing security and governance perimeter, it is essential to eliminate data silos and establish simple, consistent policies. Data silos can hinder collaboration and access to valuable data, limiting the benefits of generative AI and LLMs.

By breaking down data silos and establishing policies that allow teams to access necessary data within a secure and governed environment, you can create a streamlined and efficient data ecosystem. This ensures that data teams have the necessary data to develop and customize LLMs, while also maintaining security and compliance.

Establishing simple and consistent policies further enhances data accessibility and usability, making it easier for employees to interact with LLMs and extract valuable insights. This approach promotes a culture of data-driven decision-making and empowers employees to use generative AI technologies effectively.

Accessing actionable, trustworthy data

To leverage generative AI and LLMs effectively, it is crucial to have access to actionable and trustworthy data. This requires implementing robust data management and governance practices within your organization.

By ensuring data quality, accuracy, and integrity, you can rely on the data used to train and customize LLMs. Trustworthy data is essential for obtaining reliable and meaningful insights from AI models, minimizing the risk of generating incorrect or biased responses.

Implementing data governance practices, such as data classification, data lineage tracking, and data access controls, can further enhance the trustworthiness of the data used in generative AI applications. These practices help maintain data privacy, security, and compliance, ensuring that sensitive information is protected and used appropriately.
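One way to picture how classification, lineage, and access controls fit together is the minimal sketch below. It assumes a simple ordered sensitivity scale and a per-record `source` field for lineage. The `Sensitivity` levels, the `Record` type, and the `records_for` helper are hypothetical names invented for this example, not part of any particular governance product.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2  # e.g. records containing customer PII


@dataclass(frozen=True)
class Record:
    record_id: str
    source: str               # lineage: the system this record came from
    sensitivity: Sensitivity  # classification assigned at ingestion
    text: str


def records_for(clearance: Sensitivity, records: list[Record]) -> list[Record]:
    """Access control: keep only records at or below the caller's clearance."""
    return [r for r in records if r.sensitivity <= clearance]


records = [
    Record("faq-1", "public-website", Sensitivity.PUBLIC, "How to reset a password."),
    Record("wiki-7", "internal-wiki", Sensitivity.INTERNAL, "Escalation runbook."),
    Record("crm-3", "crm-export", Sensitivity.CONFIDENTIAL, "Customer contact details."),
]

# A fine-tuning job cleared only for INTERNAL data never sees the CRM record.
visible = records_for(Sensitivity.INTERNAL, records)
print([(r.record_id, r.source) for r in visible])
```

Filtering before data reaches a training or prompting step keeps the policy in one place, rather than relying on each downstream consumer to enforce it.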

Hosting and deploying LLMs within a protected environment

To mitigate the risks associated with exposing sensitive data, it is advisable to host and deploy LLMs within a protected environment. This allows you to maintain control over your data and ensure its security and privacy.

By hosting LLMs within your existing security perimeter, you can leverage your organization’s infrastructure and security measures to safeguard sensitive information. This approach enables you to monitor and control access to the LLMs, ensuring that only authorized personnel can interact with them.
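The idea of monitoring and controlling access can be sketched as a thin wrapper around whatever model you host. The example below is illustrative only: the role names, the `guarded_call` function, and the `fake_model` stand-in are assumptions, and a real deployment would rely on your organization's existing identity and logging systems.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("llm.audit")

AUTHORIZED_ROLES = {"data-scientist", "analyst"}  # hypothetical role names


def guarded_call(user: str, role: str, prompt: str, model: Callable[[str], str]) -> str:
    """Check the caller's role, record the attempt, then forward the prompt."""
    if role not in AUTHORIZED_ROLES:
        audit_log.warning("denied user=%s role=%s", user, role)
        raise PermissionError(f"{user} is not authorized to query the model")
    # Log metadata only, so the audit trail does not copy sensitive prompt text.
    audit_log.info("allowed user=%s role=%s prompt_chars=%d", user, role, len(prompt))
    return model(prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a model hosted inside the security perimeter."""
    return f"[model reply to {len(prompt)} characters]"


print(guarded_call("dana", "analyst", "Summarize Q3 churn drivers.", fake_model))
```

Calling `guarded_call` with a role outside `AUTHORIZED_ROLES` raises `PermissionError` and leaves a warning in the audit log.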

Deploying LLMs within a protected environment also facilitates customization and further development of the models according to your organization’s specific needs. Data teams can work closely with the LLMs, iteratively refining and optimizing them to improve performance and generate more precise and relevant outputs.

Customizing models using internal data

To make LLMs more intelligent and tailored to your organization’s specific needs, it is crucial to customize models using internal data. Your organization possesses valuable internal data that can be used to train and fine-tune the LLMs to provide accurate and relevant responses.

By utilizing internal data, you can ensure that the LLMs are well-informed about your business, customers, and industry. This enables the models to answer questions and provide insights that are specific to your organization.

Customization also goes hand in hand with lower resource needs. Smaller models that target specific use cases require less compute power and memory than large general-purpose models, which makes them more cost-effective and efficient to run.

Using reputable vendors with explicit guarantees

When implementing generative AI and utilizing LLMs, it is essential to work with reputable vendors that offer explicit guarantees about the models they provide. This ensures that the models meet certain standards of quality, security, and reliability.

By choosing reputable vendors, you can have confidence in the models’ performance and mitigate risks associated with using unreliable or insecure models. Vendors that offer explicit guarantees demonstrate their commitment to delivering trustworthy and robust AI solutions.

It is essential to thoroughly review the fine print and terms of service when engaging with vendors to ensure that the models meet your organization’s specific requirements and comply with relevant regulations and standards.

Extracting information from unstructured sources

A significant portion of valuable data is stored in unstructured formats, such as emails, images, contracts, and training videos. To leverage this data effectively, it is crucial to employ technologies like natural language processing (NLP) to extract information from unstructured sources.

NLP techniques enable the extraction of valuable insights and information from unstructured data, facilitating the training and customization of LLMs. By extracting information from different types of data sources, you can build and train more powerful AI models that can spot relationships between different types of data and provide comprehensive insights for your business.

By harnessing the power of NLP and extracting information from unstructured sources, you can maximize the value and potential of generative AI and LLMs in your organization.

In conclusion, minimizing data risk for generative AI and LLMs in the enterprise comes down to three steps: working within your existing security and governance perimeter, building domain-specific LLMs, and extracting information from unstructured sources. Eliminating silos and establishing simple, consistent policies give teams access to actionable, trustworthy data. Hosting and deploying LLMs within a protected environment and customizing them with internal data improve the reliability and relevance of their outputs. Finally, proceeding deliberately but cautiously, with reputable vendors that offer explicit guarantees, helps ensure the reliability and security of the LLMs used in your organization.